TL;DR

Explainable AI and model interpretability make AI decisions transparent and understandable, which is essential for building trust and accountability in the age of complex, generative AI.

Who should read this?

Data professionals, business leaders, analysts, and anyone adopting or working with AI-driven tools.

Our New Best Friends

A million-dollar "thank you" - We don't even question how ChatGPT answers the most sophisticated questions or how Midjourney generates the most artistic images, even though both have quickly become an essential part of our lives. In the rush to embrace these generative AI marvels, the question of why and how these models arrive at their outputs is often left unexplored. Yet, as AI systems become more integrated into decision-making processes that affect finances, healthcare, and daily operations, the need for interpretability, i.e. being able to explain and trust model decisions, is more crucial than ever.

It is no secret that enormous computing power sits behind all these artefacts, down to the response to a simple "thank you" sent to a chatbot, as Sam Altman, CEO of OpenAI (the company behind ChatGPT), recently pointed out. Altman revealed that users being polite to their AI chatbot, such as saying "please" and "thank you", costs millions of dollars in processing power once it all adds up. But how many of us really understand how ChatGPT works? Why do we even feel the need to say "thank you" to a machine learning model (more specifically, a Generative Pre-trained Transformer, hence the abbreviation GPT) composed of algorithms, functions, and parameters, which is, in essence, a piece of code?

Real-World Examples: Why Model Interpretability Matters

"Give me a SQL query that calculates …" - Imagine a bank denying your loan application, a hospital recommending a treatment that doesn't seem to fit what you believe your illness is, or an e-commerce platform flagging your transaction as fraud when it is 100% genuine, all powered by machine learning models. In each case, you need to understand why the model made its decision. Without interpretability, we risk blindly trusting black-box systems, potentially reinforcing biases or making costly errors. Leaving the loan and e-commerce examples aside, health is everyone's top priority, so you would naturally want to know exactly why that treatment was recommended to you.

Let's look at a more practical example: a data analyst using ChatGPT to generate a complex SQL query must fully understand, maintain, and be accountable for the query's logic and for the decisions made from its output. They should be able to answer any question raised after presenting the results. Getting results with AI tools is quick; understanding those results is often the most time-consuming part, especially if you lack expertise in the subject matter, in this case SQL. If we use AI products and act on their results, we must be accountable for the outcomes.

What is Explainable AI and/or Machine Learning Interpretability?

Explainable AI (XAI) refers to methods and tools that make the decisions of AI and machine learning models understandable to humans. Interpretability is about how easily a person can grasp why a model made a particular prediction or decision. While interpretability focuses on the transparency of the model's internal logic, explainability goes further by providing clear reasons for specific outputs, often after the model has made its prediction. Both are crucial for building trust, identifying errors or biases, and ensuring accountability when AI is used in real-world, high-stakes settings like finance or healthcare. As AI models become more complex, explainable AI and interpretability help ensure that humans remain in control and can justify or challenge automated decisions.

Interpretable by Design

Models that explain themselves. Not all interpretability is an afterthought. Some models are inherently interpretable by design, meaning their structure is simple enough for humans to understand without extra tools. Examples include:

- Linear Regression: Each feature's coefficient directly shows its effect on the outcome, holding the other features constant.

- Logistic Regression: Extends linear regression to classification; each weight shows how a one-unit change in a feature shifts the log-odds of the outcome.

- Decision Trees: Visualize the path from input features to predictions, making the logic transparent.

- Rule-Based Systems: Use human-readable "if-then" rules to make decisions.

These models are ideal when interpretability is as important as predictive power, for instance in regulated industries or high-stakes decision-making.
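To make the logistic regression point concrete, here is a minimal sketch using only the Python standard library. The loan-style feature names, weights, and bias are invented for illustration rather than fitted to real data. The point is that each weight can be read directly: adding 1 to a feature adds its weight to the log-odds, which multiplies the odds of approval by exp(weight).

```python
import math

# Hypothetical logistic regression for loan approval.
# Weights and bias are invented for illustration, not fitted to real data.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.25}
BIAS = -2.0


def log_odds(applicant: dict) -> float:
    """Linear part of the model: bias plus the sum of weight * feature value."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def approval_probability(applicant: dict) -> float:
    """Logistic (sigmoid) function applied to the log-odds."""
    return 1.0 / (1.0 + math.exp(-log_odds(applicant)))


def odds(probability: float) -> float:
    return probability / (1.0 - probability)


applicant = {"income_k": 60.0, "debt_ratio": 0.35, "years_employed": 4.0}
p = approval_probability(applicant)

# Each term weight * value is that feature's contribution to the log-odds.
contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}

# One extra year of employment multiplies the odds by exp(0.25), about 1.28,
# regardless of the applicant's other feature values.
one_more_year = dict(applicant, years_employed=applicant["years_employed"] + 1)
odds_ratio = odds(approval_probability(one_more_year)) / odds(p)

print(f"P(approve) = {p:.3f}")
for name, value in contributions.items():
    print(f"{name:>15}: {value:+.2f} log-odds")
print(f"odds ratio for +1 year employed: {odds_ratio:.3f} (exp(0.25) = {math.exp(0.25):.3f})")
```

Because the model is linear in the log-odds, this reading holds for every applicant, which is what makes the model interpretable by design; it does not carry over to a random forest or a neural network.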

Model-Agnostic Methods: SHAP Example

"One method to explain them all…" There are different types of interpretation methods, but for the sake of simplicity, let's focus on model-agnostic ones, which treat the model as a black box and need only its inputs and predictions. For complex, high-performing models like random forests or neural networks, interpretability often comes from post-hoc (after-training) methods. Among these, SHAP (SHapley Additive exPlanations) stands out for its theoretical rigor and practical utility.

SHAP and Shapley Values:

- SHAP uses Shapley values from cooperative game theory to fairly attribute a model's prediction to its input features.

- Each feature's SHAP value represents its contribution to pushing the prediction higher or lower compared to a baseline (average prediction).

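- SHAP satisfies key properties such as efficiency (the feature contributions add up to the difference between the prediction and the baseline), symmetry (features that contribute equally receive equal values), and additivity (the explanation of a sum of models is the sum of their explanations), which give its explanations a principled foundation.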
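The Shapley value idea in the bullets above can be sketched directly. The Python below computes exact Shapley values for a single prediction by enumerating every coalition of features. It assumes a simple value function (the model's average output when features outside the coalition are taken from a small background dataset), and the toy model and data are invented. Exact enumeration grows as 2^n in the number of features, so it is only practical for a handful of features; the shap library relies on faster algorithms such as Tree SHAP and sampling-based approximations such as Kernel SHAP instead.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, background):
    """Exact Shapley values for one instance x (a list of feature values).

    Value of a coalition S: the model's average output over the background rows,
    with features in S set to x's values and all other features kept from the row.
    """
    n = len(x)

    def value(coalition):
        total = 0.0
        for row in background:
            mixed = [x[j] if j in coalition else row[j] for j in range(n)]
            total += model(mixed)
        return total / len(background)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):  # coalitions S without feature i have 0..n-1 members
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition S.
                contribution += weight * (value(s | {i}) - value(s))
        phi.append(contribution)
    return phi


def toy_model(features):
    # Hypothetical model: an additive term plus an interaction between features 1 and 2.
    x0, x1, x2 = features
    return 2.0 * x0 + x1 * x2


background = [[0.0, 1.0, 1.0], [1.0, 0.0, 2.0], [2.0, 2.0, 0.0]]
instance = [3.0, 2.0, 3.0]

phi = shapley_values(toy_model, instance, background)
baseline = sum(toy_model(row) for row in background) / len(background)

# Efficiency: baseline + sum of SHAP values reproduces the model's prediction.
print("baseline:", round(baseline, 3))
print("SHAP values:", [round(v, 3) for v in phi])
print("baseline + sum:", round(baseline + sum(phi), 3), "prediction:", toy_model(instance))
```

Feature 0 enters the toy model only additively, so its SHAP value is its weight times its distance from the background mean, 2 * (3 - 1) = 4, while features 1 and 2 share the credit for their interaction.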

Global vs. Local Explanations:

- Global interpretability shows how features influence predictions across the entire dataset, helping you understand overall model behavior.

- Local interpretability explains individual predictions, revealing which features mattered most for a specific decision; this is crucial for debugging, trust, and accountability.
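To show how local explanations roll up into a global view, here is a small Python sketch. It assumes a linear model with independent features, for which the SHAP value of feature f on a row has the closed form weight_f * (value_f - mean_f); the weights, bias, and data are invented. Each row's dictionary is a local explanation, and averaging absolute SHAP values per feature gives a common global importance ranking.

```python
# Hypothetical linear model and data, invented for illustration.
WEIGHTS = {"income": 0.5, "debt_ratio": -4.0, "tenure": 0.2}
BIAS = 10.0
data = [
    {"income": 40.0, "debt_ratio": 0.6, "tenure": 2.0},
    {"income": 75.0, "debt_ratio": 0.2, "tenure": 10.0},
    {"income": 55.0, "debt_ratio": 0.4, "tenure": 5.0},
    {"income": 90.0, "debt_ratio": 0.1, "tenure": 1.0},
]


def predict(row):
    return BIAS + sum(WEIGHTS[f] * row[f] for f in WEIGHTS)


# Feature means over the dataset act as the baseline.
means = {f: sum(row[f] for row in data) / len(data) for f in WEIGHTS}
baseline = predict(means)  # for a linear model, this equals the average prediction


def local_explanation(row):
    """SHAP values for one row of a linear model with independent features."""
    return {f: WEIGHTS[f] * (row[f] - means[f]) for f in WEIGHTS}


# Local: why did the model score the first row the way it did?
local = local_explanation(data[0])

# Global: mean absolute SHAP value per feature across all rows.
explanations = [local_explanation(row) for row in data]
global_importance = {
    f: sum(abs(e[f]) for e in explanations) / len(explanations) for f in WEIGHTS
}
ranking = sorted(global_importance, key=global_importance.get, reverse=True)

print("local, row 0:", {f: round(v, 2) for f, v in local.items()})
print("global ranking:", ranking)
```

In this toy data, debt_ratio has the largest weight in absolute terms (-4.0), yet income ranks first globally: raw coefficients depend on each feature's scale, whereas SHAP values are expressed in the units of the prediction.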

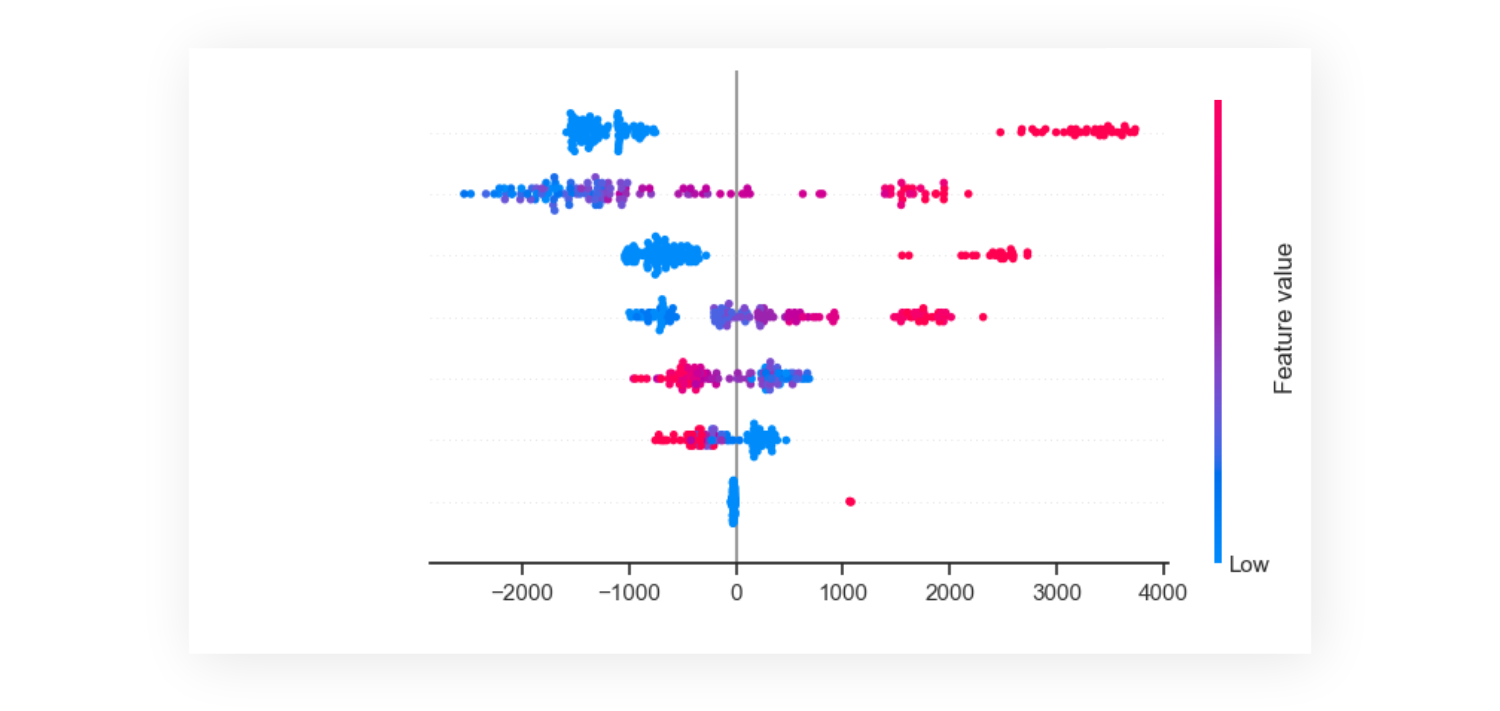

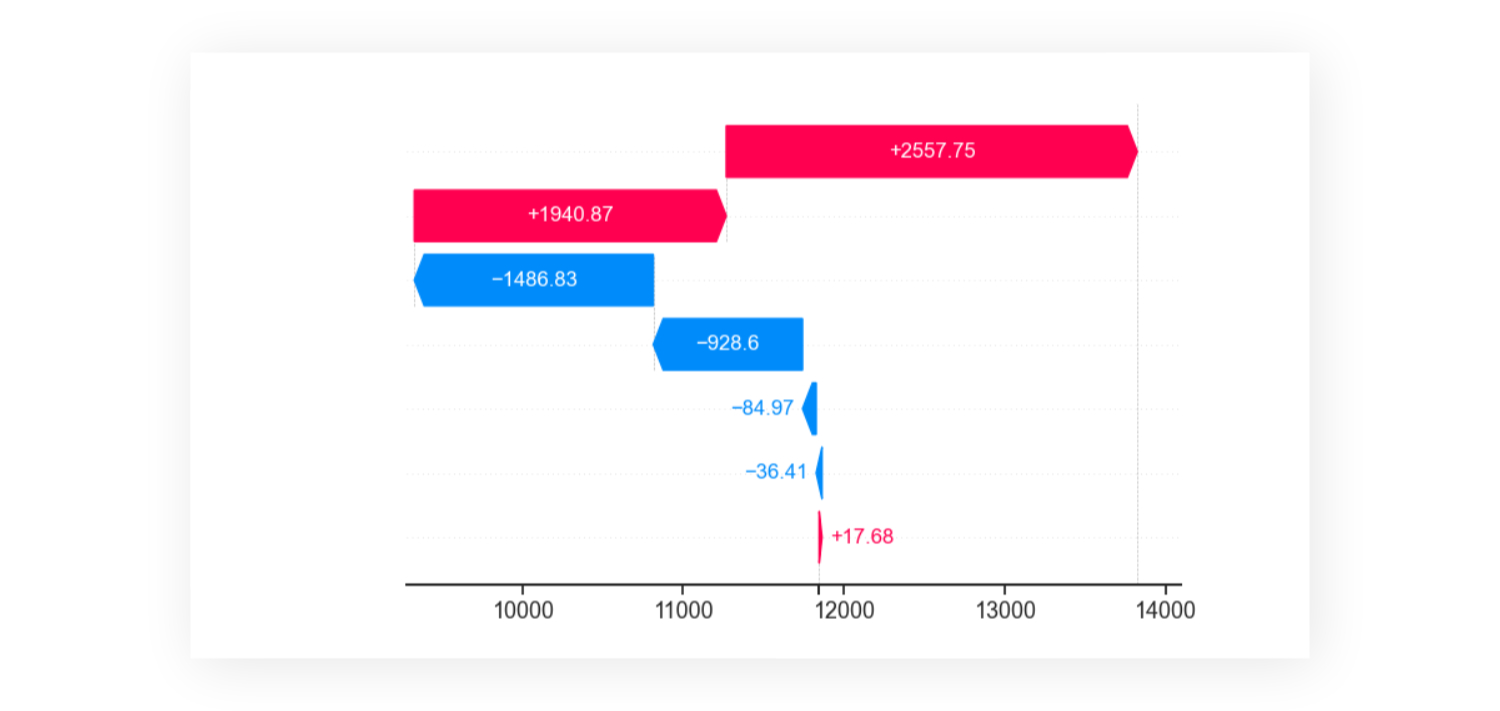

Visualizing SHAP:

SHAP plots, such as summary and waterfall plots, make these explanations accessible to both technical and non-technical audiences. In my portfolio, I used SHAP plots to explain how a random forest model predicted customer numbers for a company. These visualizations helped C-level stakeholders see which factors (e.g., marketing spend, seasonality) most influenced customer growth, bridging the gap between data science and business strategy.
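A waterfall plot reads like a running sum: it starts at the baseline (the average prediction) and adds one feature's SHAP value at a time until it reaches the model's output for that row. The standard-library Python sketch below renders that idea as text. The SHAP values are invented and are not taken from the portfolio project described above; with the shap library installed, shap.plots.waterfall draws the graphical version from a SHAP Explanation object.

```python
def text_waterfall(base_value, contributions, width=24):
    """Render a waterfall explanation as text lines.

    Starts at base_value and adds each feature's SHAP value, largest magnitude first.
    Returns the lines and the final running total (the model's prediction).
    """
    ordered = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    largest = max((abs(v) for _, v in ordered), default=0.0) or 1.0
    lines = [f"{'baseline':>16} {base_value:9.2f}"]
    running = base_value
    for name, shap_value in ordered:
        running += shap_value
        # Bar length is proportional to the contribution's magnitude.
        bar = ("+" if shap_value >= 0 else "-") * max(1, round(width * abs(shap_value) / largest))
        lines.append(f"{name:>16} {shap_value:+9.2f}  {bar:<{width}}  -> {running:.2f}")
    lines.append(f"{'prediction':>16} {running:9.2f}")
    return lines, running


# Invented SHAP values for one prediction of a hypothetical customer-count model.
lines, prediction = text_waterfall(
    base_value=120.0,
    contributions={"marketing_spend": 35.0, "seasonality": -12.5, "price": 4.0},
)
print("\n".join(lines))
```

Sorting by magnitude puts the most influential factors at the top, which is usually what a non-technical audience asks about first.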

Reflections on Data & AI Literacy

You can't read without learning the alphabet. Explainable AI and model interpretability are closely tied to data and AI literacy, the ability to critically evaluate, communicate, and use AI technologies effectively. When AI systems provide clear, understandable explanations, they help users develop more accurate mental models of how these systems work, which in turn improves trust and appropriate use. Research shows that users with higher AI literacy are better equipped to interpret and act on AI recommendations, especially when explanations are provided. This means that as organizations and individuals become more data and AI literate, they can better leverage the benefits of explainable AI, make informed decisions, and maintain accountability for AI-driven outcomes. In practice, fostering both explainability and AI literacy empowers users to question, validate, and responsibly use AI in their daily work and decision-making.

Accountability and the Human Aspect

Keeping humans in the loop. As AI systems shape decisions, accountability cannot be delegated to algorithms. In the data analyst example above, the analyst must understand, maintain, and stand behind the results. This principle extends to all AI-powered decisions: users, developers, and organizations share responsibility for outcomes, making explainability not just a technical challenge but a core ethical and operational requirement.

Explainable AI is not just a technical luxury; it's a necessity for trust, accountability, and responsible AI adoption. As generative AI systems become more pervasive and powerful, the ability to interpret and explain their decisions must keep pace. Whether through models that are interpretable by design or advanced tools like SHAP, explainability ensures that humans stay in control, informed, and accountable in the age of AI.